Secured AI Solutions in a Private Cloud Environment

Secured AI Solutions in a Private Cloud Environment describes an approach to building and operating AI entirely within private infrastructure. It enables teams to use AI for internal workflows such as document analysis, speech transcription, and code review while maintaining full control over data, security, and compliance.

Introduction

Artificial intelligence is increasingly used inside engineering organizations to support development, knowledge management, and operational workflows. At the same time, many teams face legitimate concerns related to data protection, regulatory compliance, and operational control when adopting external AI services.

To address these concerns, our company develops and operates secured AI solutions deployed entirely within private infrastructure. These solutions are designed as internal capabilities, integrated into existing workflows, and governed by the same security and operational standards as the rest of our systems.

Core Principle: Private and Controlled Deployment

All AI components described below are deployed on-premises or within a private cloud environment. There is no default routing of data to public AI services.

This approach allows us to:

- retain full control over data flows,

- enforce enterprise authentication and authorization,

- apply internal security and compliance policies,

- ensure predictable operational behavior.

AI is treated as part of internal infrastructure, not as an external dependency.

Internal AI Platform Overview

Our internal AI platform consists of three main building blocks: a large language model, a speech-to-text model, and an embedding model. Each component is deployed locally and accessed through internal APIs.

Large Language Model (LLM)

The locally deployed LLM is used for internal workflows, including:

- conversational interfaces for internal assistance,

- summarization of documents, emails, and meeting transcripts,

- structured information extraction (for example, producing JSON fields or forms),

- drafting internal technical documentation and communications,

- Retrieval-Augmented Generation (RAG), where responses are grounded in internal knowledge sources.

Key characteristics:

- execution within private infrastructure,

- access controlled through corporate identity providers,

- configurable prompt templates and limits,

- monitoring of latency, throughput, and error rates.

The model is not exposed publicly and is accessible only to authorized internal services and users.
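
As an illustration of configurable prompt templates and limits, the following minimal sketch renders a summarization prompt and attaches size limits to the request. The `PromptConfig` class, the `build_request` helper, the limit values, and the request field names are hypothetical and are not taken from the platform itself.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptConfig:
    """Hypothetical per-use-case settings; names and defaults are illustrative."""
    template: Template
    max_input_chars: int = 8000   # assumed limit on user-supplied text
    max_output_tokens: int = 512  # assumed cap forwarded to the model server


SUMMARIZE = PromptConfig(
    template=Template(
        "Summarize the following internal document in $sentences sentences.\n\n"
        "Document:\n$document"
    ),
)


def build_request(config: PromptConfig, document: str, sentences: int = 3) -> dict:
    """Render the template and attach limits, truncating oversized input."""
    clipped = document[: config.max_input_chars]
    prompt = config.template.substitute(document=clipped, sentences=sentences)
    return {"prompt": prompt, "max_tokens": config.max_output_tokens}
```

Keeping templates and limits in one configuration object means they can be reviewed and versioned like any other internal setting.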

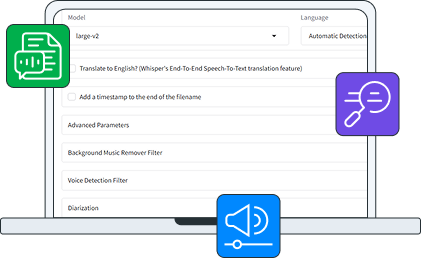

Voice-to-Text and Automatic Speech Recognition (VTT/ASR)

A locally deployed speech recognition model is used to convert audio into text for internal use cases such as:

- meeting and call transcription,

- processing recorded voice messages,

- optional enrichment with timestamps and segmentation.

A typical processing pipeline includes:

- audio ingestion,

- normalization and resampling,

- transcription and post-processing,

- generation of text output with available metadata.

Audio data remains within the private environment throughout the entire pipeline.
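
The normalization and resampling step can be sketched as follows, assuming mono audio held as a list of float samples. The function names are hypothetical, and linear interpolation is used only for brevity: it applies no anti-aliasing filter, so a production pipeline would more likely rely on a dedicated resampling library.

```python
def peak_normalize(samples: list[float], target_peak: float = 0.9) -> list[float]:
    """Scale samples so the loudest one reaches target_peak (silence is unchanged)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    scale = target_peak / peak
    return [s * scale for s in samples]


def resample_linear(samples: list[float], src_rate: int, dst_rate: int) -> list[float]:
    """Resample by linear interpolation between neighbouring samples."""
    if not samples or src_rate == dst_rate:
        return list(samples)
    out_len = max(1, round(len(samples) * dst_rate / src_rate))
    step = src_rate / dst_rate  # distance between output samples, in input samples
    last = len(samples) - 1
    out = []
    for i in range(out_len):
        pos = i * step
        left = min(int(pos), last)
        right = min(left + 1, last)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out
```

A call such as `resample_linear(peak_normalize(audio), 44100, 16000)` would prepare a recording for a model that expects 16 kHz input; that target rate is an assumption, not a documented property of the deployed model.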

Embedding Model

The embedding model converts text and documents into vector representations. These vectors are used for:

- semantic search over internal content,

- retrieval for RAG workflows,

- similarity matching and deduplication,

- clustering and relevance ranking.

The typical process includes document chunking, embedding generation, storage in a vector index, and retrieval of the most relevant segments for downstream processing by the LLM.
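
The chunk, embed, index, and retrieve flow described above can be sketched in a few lines. In this sketch `toy_embed` is a deliberately simple stand-in (a normalized bag-of-words vector) for the real embedding model, the index is a plain in-memory list rather than a vector database, and all names and default sizes are assumptions.

```python
import math
from collections import Counter


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word windows that overlap so context spans chunk borders."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    for start in range(0, len(words), max_words - overlap):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break
    return chunks


def toy_embed(text: str) -> dict[str, float]:
    """Stand-in for the embedding model: L2-normalized sparse word counts."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity of two already-normalized sparse vectors."""
    return sum(weight * b.get(term, 0.0) for term, weight in a.items())


def build_index(chunks: list[str]) -> list[tuple[str, dict[str, float]]]:
    """Store each chunk next to its vector."""
    return [(chunk, toy_embed(chunk)) for chunk in chunks]


def top_k(query: str, index: list[tuple[str, dict[str, float]]], k: int = 3) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]
```

In a RAG workflow, the chunks returned by `top_k` would be placed into the LLM prompt as grounding context.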

Security and Data Governance

Because all AI models are deployed locally:

- data remains inside the private network by default,

- access is enforced using enterprise Single Sign-On (SSO) and role-based or attribute-based access control,

- requests pass through internal APIs or gateways with auditing and rate limiting,

- secrets are managed using standard secure storage mechanisms,

- internal traffic is encrypted using Transport Layer Security (TLS).

These controls align AI usage with existing security and compliance practices rather than introducing separate exceptions.
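
A gateway check that combines role-based access, rate limiting, and auditing could look roughly like the sketch below. The role names, endpoint names, and limits are hypothetical; in a real deployment the roles would come from the identity provider, and the counters and audit records would live in shared storage rather than in process memory.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical role-to-endpoint policy, for illustration only.
ROLE_PERMISSIONS = {
    "engineer": {"llm.chat", "embeddings.search"},
    "analyst": {"asr.transcribe", "llm.chat"},
}


@dataclass
class Gateway:
    max_requests: int = 5         # assumed per-user limit
    window_seconds: float = 60.0  # assumed sliding-window length
    audit_log: list = field(default_factory=list)
    _hits: dict = field(default_factory=dict)  # user -> timestamps of allowed calls

    def authorize(self, user: str, role: str, endpoint: str,
                  now: Optional[float] = None) -> bool:
        """Apply the role check, then the rate limit, and audit every decision."""
        now = time.monotonic() if now is None else now
        allowed = endpoint in ROLE_PERMISSIONS.get(role, set())
        if allowed:
            recent = [t for t in self._hits.get(user, [])
                      if now - t < self.window_seconds]
            allowed = len(recent) < self.max_requests
            if allowed:
                recent.append(now)
            self._hits[user] = recent
        self.audit_log.append({"user": user, "endpoint": endpoint, "allowed": allowed})
        return allowed
```

Recording denied requests as well as allowed ones keeps the audit trail complete, which is usually what compliance reviews ask for.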

Operational Management

The platform is operated using standard production practices:

- monitoring of latency, error rates, and resource utilization,

- centralized logging and request tracing across services,

- controlled versioning of models with rollback procedures,

- separation of environments such as development, QA, and production where applicable.

This ensures that AI components behave predictably and can be maintained using established operational processes.
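
Controlled versioning with rollback can be pictured as a per-environment deployment history. The `ModelRegistry` class and the version labels below are hypothetical and only illustrate the idea; an actual setup would typically persist this state and tie it to the deployment tooling.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Tracks which model version each environment serves (illustrative only)."""
    history: dict = field(default_factory=dict)  # environment -> deployed versions

    def deploy(self, env: str, version: str) -> None:
        """Record a new version as the active one for the environment."""
        self.history.setdefault(env, []).append(version)

    def active(self, env: str) -> str:
        """Return the version currently served in the environment."""
        return self.history[env][-1]

    def rollback(self, env: str) -> str:
        """Drop the newest version and return the one that becomes active."""
        versions = self.history[env]
        if len(versions) < 2:
            raise RuntimeError(f"no earlier version to roll back to in {env!r}")
        versions.pop()
        return versions[-1]
```

Because each environment keeps its own history, a rollback in production leaves development and QA untouched.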

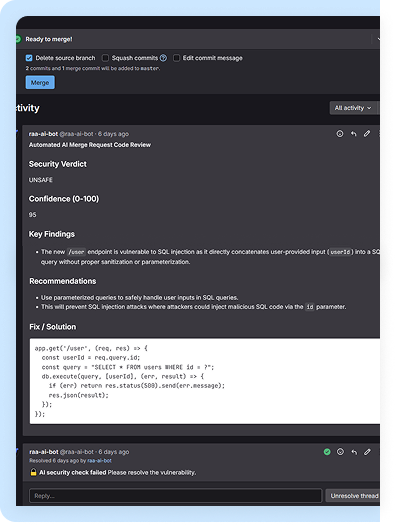

Applied Internal Solution: AI-Assisted Code Review for GitLab

One of the internal solutions built on top of this platform addresses code review workflows in GitLab.

Context

GitLab Merge Requests serve as the primary review mechanism before code is integrated into the main codebase. In teams that maintain high quality standards, each Merge Request acts as a final checkpoint for correctness, security, and maintainability.

As development velocity increases, reviewers face growing pressure to identify subtle issues such as:

- accidentally committed credentials,

- inefficient queries or performance regressions,

- minor oversights that accumulate into technical debt.

Repeated identification and explanation of similar issues can reduce the effectiveness of reviews over time.

Solution Description

The Right&Above Engineering Assistant is a self-hosted internal service that automatically participates in Merge Request discussions.

It uses the locally deployed AI models described above and operates entirely within the private environment.

Core functionality:

- analysis of code diffs with a clear verdict, where critical issues must be resolved before merge,

- suggestions for code improvements related to readability and maintainability,

- on-demand explanations when developers mention the assistant in comments, describing why an issue matters and how it can be addressed.
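
The idea of a clear verdict, where critical findings block the merge, can be sketched as follows. The assistant itself relies on the locally deployed models; the regular-expression scan below is a simplified rule-based stand-in used only to produce example findings, and the `Finding` type, the patterns, and the verdict labels are assumptions.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; a real scanner would use a much broader rule set.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # shape of an AWS access key ID
    re.compile(r"(?i)(password|secret|api_key|token)\s*[:=]\s*['\"][^'\"]{4,}['\"]"),
]


@dataclass(frozen=True)
class Finding:
    line: str
    severity: str  # "critical" or "suggestion"
    message: str


def scan_diff(diff: str) -> list[Finding]:
    """Flag added lines in a unified diff that look like hard-coded credentials."""
    findings = []
    for raw in diff.splitlines():
        # Only lines added by the change matter; "+++" is the file header.
        if not raw.startswith("+") or raw.startswith("+++"):
            continue
        added = raw[1:]
        if any(pattern.search(added) for pattern in SECRET_PATTERNS):
            findings.append(
                Finding(added.strip(), "critical", "possible hard-coded credential")
            )
    return findings


def verdict(findings: list[Finding]) -> str:
    """Any critical finding blocks the merge; otherwise the change may proceed."""
    if any(f.severity == "critical" for f in findings):
        return "changes_required"
    return "approved"
```

Separating the findings from the verdict lets non-critical suggestions appear in the discussion without blocking the merge.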

Operational Characteristics

- fully deployed within private infrastructure,

- no transmission of source code or metadata to external services,

- consistent, repeatable behavior based on controlled prompts and fixed settings,

- automatic re-analysis when new commits are added to a Merge Request,

- analysis depth adapted to the scope of changes.

The assistant supplements, rather than replaces, human reviewers.
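
Two of the characteristics listed above, re-analysis on new commits and scope-dependent depth, can be sketched as below. The thresholds, depth labels, and the `ReviewTracker` class are assumptions made for illustration; the source does not specify how depth is chosen or how new commits are detected.

```python
def analysis_depth(changed_lines: int) -> str:
    """Map diff size to a depth label; thresholds are assumed, not documented."""
    if changed_lines <= 50:
        return "full"      # small change: examine every hunk in detail
    if changed_lines <= 500:
        return "focused"   # medium change: prioritize the riskiest files
    return "summary"       # large change: high-level pass only


class ReviewTracker:
    """Remembers the last analyzed commit of each Merge Request."""

    def __init__(self) -> None:
        self._last_sha = {}  # Merge Request id -> head commit SHA already analyzed

    def needs_analysis(self, mr_id: int, head_sha: str) -> bool:
        """Return True only when the head commit differs from the last one seen."""
        if self._last_sha.get(mr_id) == head_sha:
            return False
        self._last_sha[mr_id] = head_sha
        return True
```

Comparing head commit identifiers avoids repeating an analysis when an event arrives for a Merge Request whose code has not changed.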

Observed Effects

During internal usage, the assistant identified issues such as unintentionally exposed credentials and potential client-side vulnerabilities, and provided numerous suggestions related to code clarity and performance.

By handling routine checks, it allowed reviewers to spend more time on architectural and design-level discussions.

AI That Works

Our approach to AI focuses on secured, internally operated solutions deployed in private infrastructure. By building a shared AI platform and layering practical internal tools on top of it, we integrate AI into daily workflows without compromising control, security, or compliance.

These solutions are designed to support teams, reduce operational friction, and improve consistency, while remaining fully aligned with enterprise security requirements and internal governance standards.

